Experience raises what-if moments AI might catch—but also deeper questions only humans think to ask.

By Rick Schrenker

I’ve been retired for almost five years. While working, I wrote a bit about medical technology risk management and systems engineering, including for magazines like 24×7. I frequently shared my concerns about the rate of change in technology and the need to get technology requirements right, especially requirements associated with the “ilities” (dependability, reliability, maintainability, verifiability, etc.) and safety.

So, given the title of this article, you might think this is a hit piece on artificial intelligence (AI). But I come not to bury AI but to praise it, at least to start.

AI Might Have Caught It

Ten or so years ago, I was admitted to a hospital for foot cellulitis, a potentially dangerous infection. After almost two weeks of IV antibiotics, an MRI, and a bone biopsy, I was scheduled to have a peripherally inserted central catheter (PICC) placed and then go home to continue the therapy. I had been getting around the floor on crutches for days and was to be sent home with them as well; a physical therapist had even taught me how to navigate stairs.

Just before going to radiology to have the PICC inserted, after which I would go directly home, another physical therapist came by. I proudly showed her how I could navigate with crutches.

“You can’t use crutches with a PICC!” As soon as she said it, I understood. A PICC goes up your arm, across your shoulder, and down into a large vein. A crutch under the armpit could crush the PICC. A very close call. I went home with a walker instead.

Since then, I’ve wondered why the EMR hadn’t picked up on my simultaneous orders for a PICC and crutches. I now wonder if an AI-enabled system could have caught it. It certainly seems possible to me.

Years before, I made a mistake in how I collected data for a study of a blood pressure transducer, which resulted in the study not being published. I closed an article on learning from mistakes by sharing the story of that mistake and wondering how I could have avoided it. At the time (and ever since), the only thing I could come up with was that I should have had my colleagues review my plan. Now I wonder if AI could have helped me avoid the error. That seems possible as well.

So I share the view, apparently held by just about everyone else who has written on the topic, that AI has a lot to offer health care in general and health care technology in particular, especially if its training data emphasizes “First, do no harm.”

Looking at AI with Experience

But I’m far too familiar with technology and how technology systems fail to let it go at that. AI wasn’t even a blip on the horizon for most people when I retired; now it’s ubiquitous in health care and everywhere else. When I turned my attention to it a year or so ago, I brought along my bias that the rate of technological change has been accelerating and is hard to keep up with in a practical sense, eg, in ensuring that user and maintenance training remain adequate.

I also brought along the sliver of knowledge I obtained about neural networks when I finished my MSEE in 1988, particularly my concerns around validation and verification of the performance of systems that can modify themselves based on what they “learn.”

I will keep this short: After a year of reading about AI, I’m more concerned now than I was when I started. In part, that’s because I’m no longer at the pointy end of the stick, and I place what I read in the context of the care environment of five years ago (and before COVID). I can’t relate anymore.

I don’t add AI-enabled devices to an inventory (my understanding is that AI-enabled devices currently must be “locked down” to receive 510(k) clearance). I don’t see manuals on how to verify their performance. I don’t see user training manuals, attend in-services, or hear comments from physicians, nurses, or technologists. I don’t know the actual practical implications of AI for clinical engineering and healthcare technology management. So I’m not going to “cry wolf”: things seem to have changed so much in just five years that, in all good conscience, I can’t.

Questions Worth Asking About AI

I will, however, leave you with some things to ponder.

First, a question that came to mind a while ago: Imagine you’re in a regional delivery system and replace all of your infusion pumps with AI-enabled ones. Now imagine they are not “locked down” but can modify themselves based on what they learn while delivering fluid therapies of various types. Is it possible, as a result, that pumps of the same make and model could operate differently? If so, what constraints can be placed on changes? Will it be evident to a user or technician that the code has changed? Should such a change generate a new version number? What, if anything, should users know about the potential for differences between pumps?

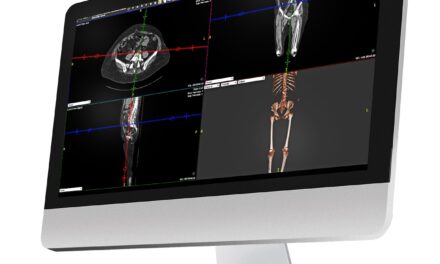

Second, my reading suggests there are at least discussions under way about using AI with medical equipment management database systems to, for instance, drive inspection and preventive maintenance scheduling. I looked at a lot of equipment and work order records during my career, and I have to ask whether the field really believes the data in those systems is of sufficient quality to support AI-based decision making.

I’ll stop there. Some, if not all, of these are likely to have trivial answers. But there may be questions that aren’t trivial yet have consequential answers, and it’s up to you to ask them.

I’ll end with some links generated in response to queries for “AI failures in healthcare technology” and “AI failures in medical devices” for you to consider:

- https://medicalxpress.com/news/2024-08-fda-ai-medical-devices-real.html

- https://www.jdsupra.com/legalnews/4-common-ways-that-ai-driven-medical-3346085

- https://www.axios.com/2025/03/12/ai-fails-health-predictions-study

- https://www.axios.com/2023/12/20/ai-bias-diagnosis

- https://www.fmai-hub.com/the-hidden-crisis-when-healthcare-ai-fails-in-silence

- https://jamanetwork.com/journals/jama-health-forum/fullarticle/2815239

- https://psnet.ahrq.gov/perspective/artificial-intelligence-and-patient-safety-promise-and-challenges

- https://learn.hms.harvard.edu/insights/all-insights/confronting-mirror-reflecting-our-biases-through-ai-health-care

- https://pmc.ncbi.nlm.nih.gov/articles/PMC9110785

- https://24x7mag.com/standards/regulations/ecri-institute/ai-tops-2025-health-technology-hazards-list/

- https://www.mq.edu.au/research/research-centres-groups-and-facilities/healthy-people/centres/australian-institute-of-health-innovation/news-and-events/news/news/ai-enabled-medical-devices-raise-safety-concerns

- https://www.medical-device-regulation.eu/wp-content/uploads/2020/09/machine_learning_ai_in_medical_devices.pdf

- https://www.dll-law.com/blog/ai-medical-malpractice-risk-in-catastrophic-injury-cases.cfm

- https://www.i-jmr.org/2024/1/e53616

- https://www.frontiersin.org/journals/digital-health/articles/10.3389/fdgth.2024.1279629/full

- https://www.nih.gov/news-events/news-releases/nih-findings-shed-light-risks-benefits-integrating-ai-into-medical-decision-making

- https://www.newscientist.com/article/2462356-ai-chatbots-fail-to-diagnose-patients-by-talking-with-them

About the author: Rick Schrenker is a former clinical engineer with more than four decades of experience at Johns Hopkins Hospital and Massachusetts General Hospital. He holds a BSE and MSEE from Johns Hopkins University and has focused his career on medical device interoperability, risk management, and systems engineering. A cofounder of the Baltimore Medical Engineers and Technicians Society, he is a life member of IEEE and a senior member of AAMI. Questions and comments can be directed to 24×7 Magazine chief editor Alyx Arnett.

ID 367313423 | Ai © Weerapat Wattanapichayakul | Dreamstime.com